Download Datasets from Kaggle on Google Colab

A tutorial about how to set up and use the Kaggle API to download a dataset from Kaggle on Google Colab.

Google Colab is a platform on which you can run GPU (and TPU) accelerated programs in a Jupyter-notebook-like environment. As a result, it is ideal for machine learning education and basic research. The platform is free to use and has TensorFlow and fastai pre-installed.

However, before we can train any machine learning models, we need to get data. Kaggle is a platform from which you can download many different datasets that can be used for machine learning. In this blog post I want to show how to download data from Kaggle on Google Colab. It consists of the following steps:

- Set up the Kaggle API

- Download the data

Set up the Kaggle API

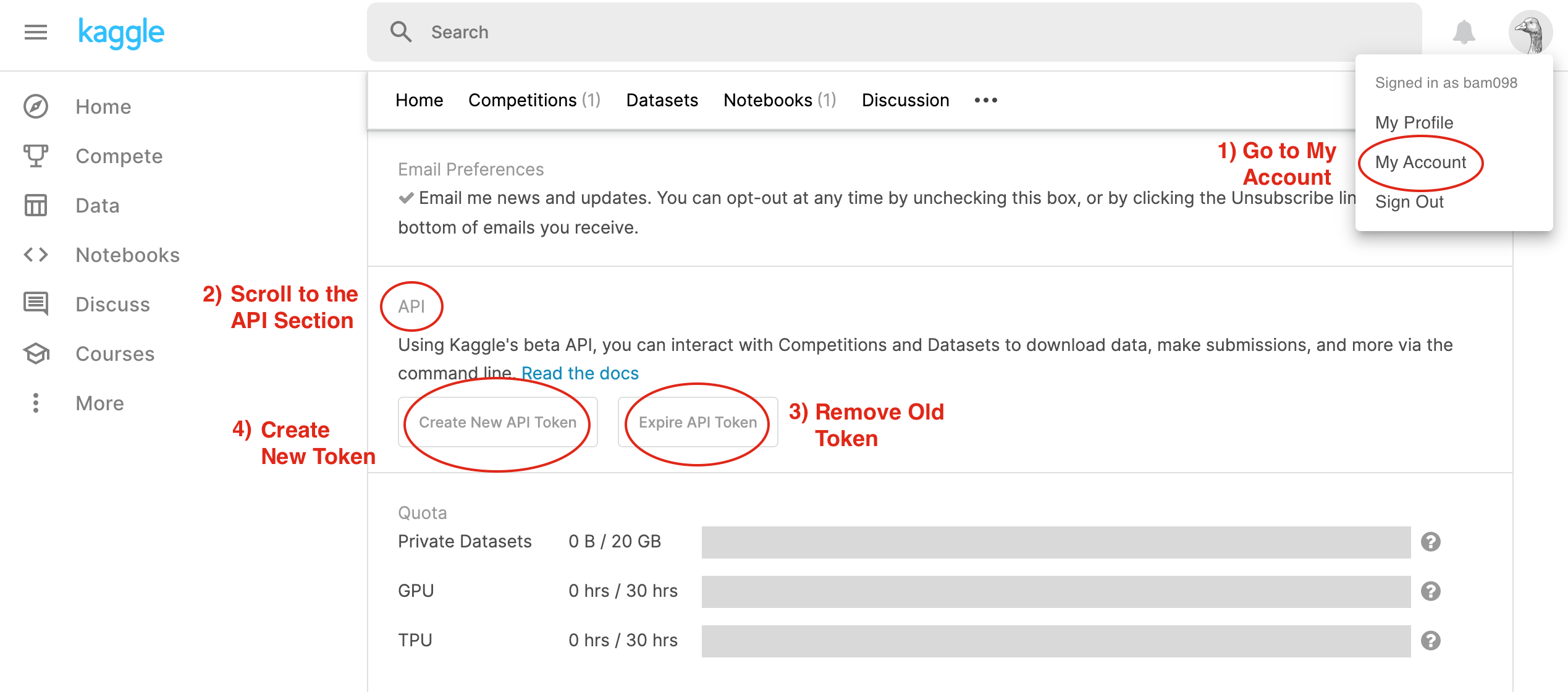

There are several ways to download data from Kaggle. An easy way is to use the Kaggle API. To set up the Kaggle API on Google Colab we need to run several steps. First of all we need a Kaggle API token. If you already have one, you can simply use it. However, if you do not have one or you want to create a new one, you need to do the following:

- You need to log into Kaggle and go to My Account. Then scroll down to the API section and click on Expire API Token to remove previous tokens.

- Then click on Create New API Token. It will download a kaggle.json file to your local machine.
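
Before uploading the token, it can help to confirm that the downloaded file is intact. The following is a minimal sketch, assuming the file is a JSON object with the two fields username and key, which is the layout Kaggle uses for its API token. The helper name load_kaggle_token is made up for this example.

```python
import json
from pathlib import Path


def load_kaggle_token(path):
    """Read a kaggle.json file and check that it has the two expected fields.

    Hypothetical helper: the field names "username" and "key" are the ones
    Kaggle writes into the token file.
    """
    token = json.loads(Path(path).read_text())
    if not isinstance(token, dict):
        raise ValueError("kaggle.json should contain a JSON object")
    missing = {"username", "key"} - set(token)
    if missing:
        raise ValueError(f"kaggle.json is missing fields: {sorted(missing)}")
    return token
```

Calling load_kaggle_token("kaggle.json") either returns the parsed token or raises an error that says what is wrong with the file.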

When you have the kaggle.json file, you can set up Kaggle on Google Colab. To do so, log into Google Colab and create a new notebook there. Then you need to execute the following steps.

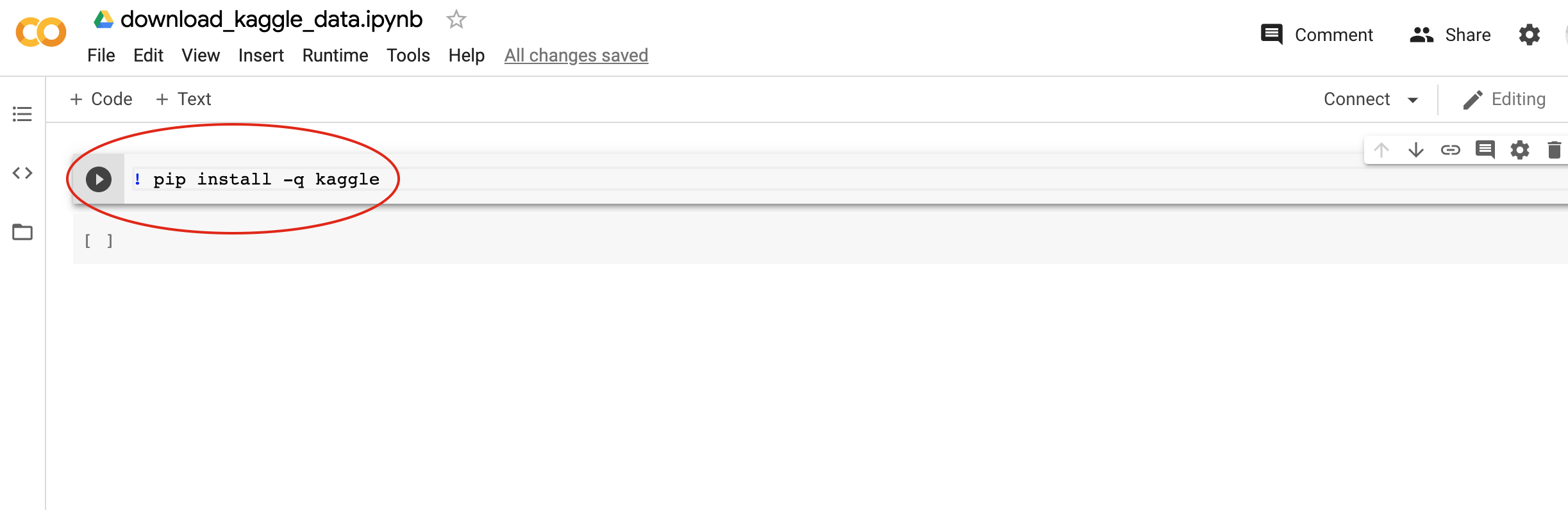

First of all, we need to install the kaggle package on Google Colab. To do so, run the following code in a Google Colab cell.

! pip install -q kaggle

Next we need to upload the kaggle.json file. We can do this by running the following code, which will trigger a prompt that lets you upload a file.

from google.colab import files

files.upload()
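
files.upload() returns a dictionary that maps each uploaded file name to its content as bytes. If an older kaggle.json is still lying in the working directory, Colab may store the new upload under a different name, so it can be safer to write the bytes to a fixed name ourselves. A small sketch of that idea follows; save_uploaded_token is a hypothetical helper, not part of google.colab.

```python
from pathlib import Path


def save_uploaded_token(uploaded, dest="kaggle.json"):
    """Write the single uploaded file to `dest`, whatever name Colab gave it.

    `uploaded` is assumed to be the dict returned by files.upload(),
    mapping each uploaded file name to its content as bytes.
    """
    if len(uploaded) != 1:
        raise ValueError(f"expected exactly one uploaded file, got {len(uploaded)}")
    (name, content), = uploaded.items()
    Path(dest).write_bytes(content)
    return name  # the name Colab reported for the upload
```

In a notebook this would be used as save_uploaded_token(files.upload()).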

Since all the data we upload to Google Colab is lost after closing Google Colab, we need to save all data to our Google Drive. To mount our Google Drive space we need to run the following code. Google Colab will ask you to enter an authorization code. You can get one by clicking the corresponding link that appears after running the code.

from google.colab import drive

drive.mount('/content/gdrive', force_remount=True)

The home folder of your Google Drive is located under /content/gdrive/My Drive. We create a folder named .kaggle in that home folder. There we want to store the kaggle.json file.

! mkdir /content/gdrive/My\ Drive/.kaggle/
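
The same folder can also be created from Python, where the space in My Drive needs no backslash. This is only a sketch under the assumption that Drive is mounted at /content/gdrive as above; DRIVE_HOME and ensure_dir are names chosen for this example.

```python
from pathlib import Path

# Assumption: Drive is mounted at /content/gdrive, as in the mount cell above.
DRIVE_HOME = Path("/content/gdrive") / "My Drive"


def ensure_dir(path):
    """Create `path` (and any missing parents); do nothing if it already exists."""
    path = Path(path)
    path.mkdir(parents=True, exist_ok=True)
    return path
```

ensure_dir(DRIVE_HOME / ".kaggle") can be run again in a later session without an error, whereas a plain mkdir complains when the folder is already there.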

After creating the .kaggle folder we can move the kaggle.json file there.

! mv kaggle.json /content/gdrive/My\ Drive/.kaggle/

Change the permissions of the file so that only its owner can read and write it.

! chmod 600 /content/gdrive/My\ Drive/.kaggle/kaggle.json
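
To see what mode 600 means in practice, the permission bits can be inspected from Python. The sketch below uses a hypothetical helper is_private that reports whether group and others have no access at all. It makes no claim about how a particular file system stores these bits.

```python
import os
import stat


def is_private(path):
    """Return True if neither group nor others have any access to `path`."""
    mode = stat.S_IMODE(os.stat(path).st_mode)  # keep only the permission bits
    return (mode & (stat.S_IRWXG | stat.S_IRWXO)) == 0
```

A file with mode 600 passes this check, while a file with the common default mode 644 does not.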

However, the kaggle.json file is not yet in the location where the kaggle package expects it. The package looks for it under /root/.kaggle. I just wanted to store it in the home folder of our Google Drive so that it is not lost after we close Google Colab. As a result, we do not need to upload the kaggle.json file again the next time we want to download data from Kaggle. We can simply copy it from the .kaggle folder in our Google Drive home folder instead.

Let's copy the .kaggle folder from there to /root now.

! cp -r /content/gdrive/My\ Drive/.kaggle/ /root/
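
In a later session, only the Drive mount and this copy step have to be repeated. A Python version of the copy could look like the sketch below. It assumes the token sits in a .kaggle folder on Drive as set up above, and it sets mode 600 on the copy again because a plain copy does not necessarily keep the mode of the original. install_token is a hypothetical helper name.

```python
import os
import shutil
from pathlib import Path


def install_token(drive_kaggle_dir, home=None):
    """Copy kaggle.json from the Drive folder into <home>/.kaggle with mode 600.

    `home` defaults to the current user's home directory (/root on Colab).
    """
    home = Path(home) if home is not None else Path.home()
    target_dir = home / ".kaggle"
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / "kaggle.json"
    shutil.copyfile(Path(drive_kaggle_dir) / "kaggle.json", target)
    os.chmod(target, 0o600)  # owner read/write only
    return target
```

On Colab the call would be install_token("/content/gdrive/My Drive/.kaggle").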

Now everything should be ready. However, at this point I had some issues with the kaggle package: it did not seem to be installed correctly. If you run into the same problem, you can usually solve it by re-installing the package. I used version 1.5.6 of the package here. If you need another version, you can look up which one you need here. Then simply adjust the following commands by replacing 1.5.6 with the version number you picked. If you do not have any problems, you can skip this step.

! pip uninstall -y kaggle

! pip install --upgrade pip

! pip install kaggle==1.5.6

! kaggle -v
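
Whether the re-install took effect can also be checked from Python without calling the command line tool. The following sketch uses the standard importlib.metadata module (Python 3.8 or newer); installed_version is a name chosen for this example.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of `package`, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None
```

After the commands above, installed_version("kaggle") should return the version that was pinned, and None would mean that the package is not installed in the environment the notebook is using.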

That's it! To check whether we set up the Kaggle API correctly, we can run the following command. You should see a list of Kaggle datasets as output. If you have a problem with the kaggle package as mentioned above, you need to re-install it as described there.

! kaggle datasets list

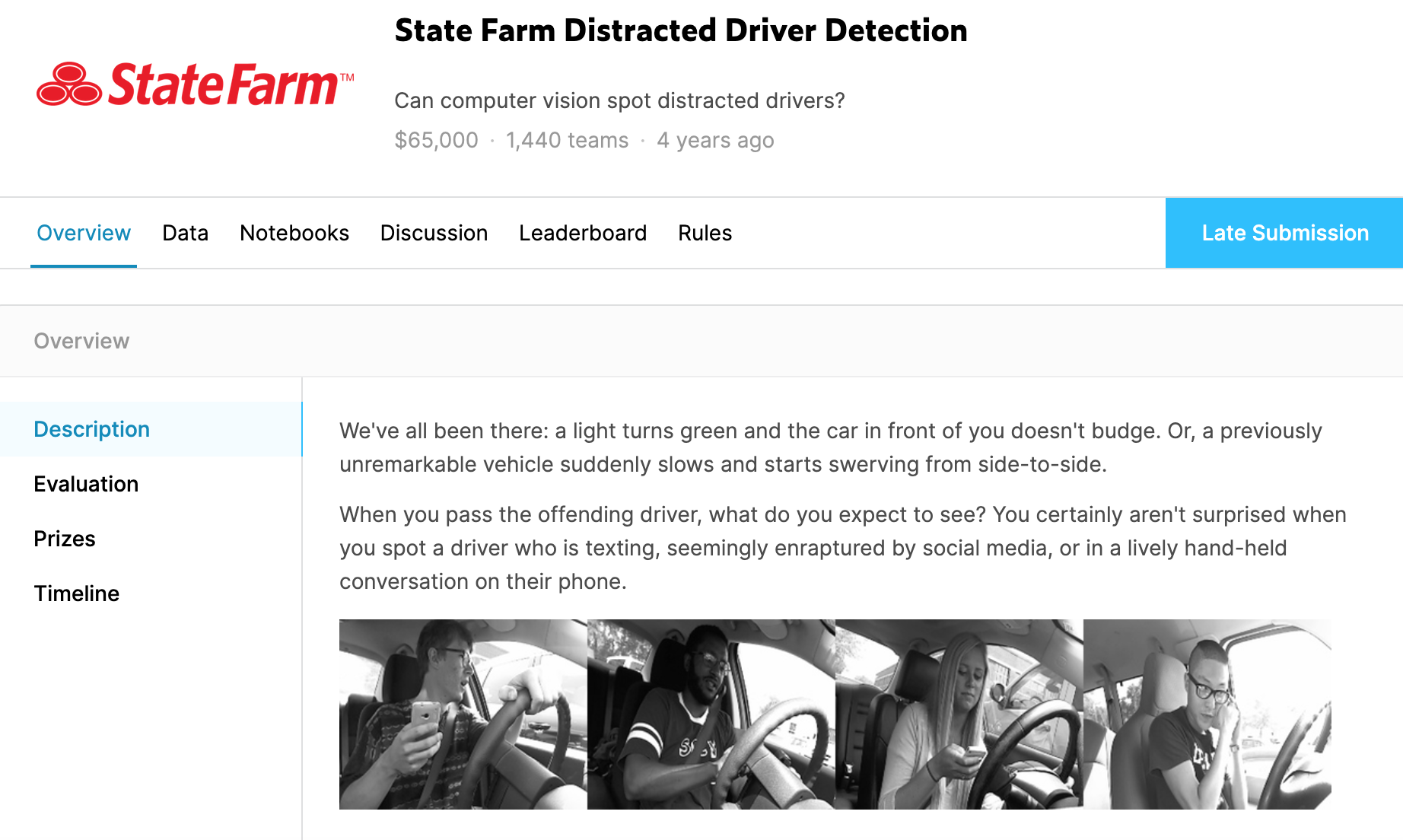

Now we can download data from Kaggle. As an example I chose the distracted driver detection dataset. It has the following URL:

https://www.kaggle.com/c/state-farm-distracted-driver-detection

The data belongs to a Kaggle competition, and the name of the competition is the last part of that URL: state-farm-distracted-driver-detection. We need this name for the download command, which is shown below.

! kaggle competitions download -c state-farm-distracted-driver-detection
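
Taking the name from the URL can be automated. The sketch below assumes a competition URL of the form shown above, where the name follows a /c/ or /competitions/ path segment. Both function names are made up for this example.

```python
from urllib.parse import urlparse


def competition_slug(url):
    """Return the competition name from a Kaggle competition URL."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    if len(segments) >= 2 and segments[0] in ("c", "competitions"):
        return segments[1]
    raise ValueError(f"not a competition URL: {url}")


def download_command(url):
    """Build the command line that downloads the competition data."""
    return f"kaggle competitions download -c {competition_slug(url)}"
```

For the URL above, download_command returns exactly the command used in the cell, without the leading exclamation mark.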

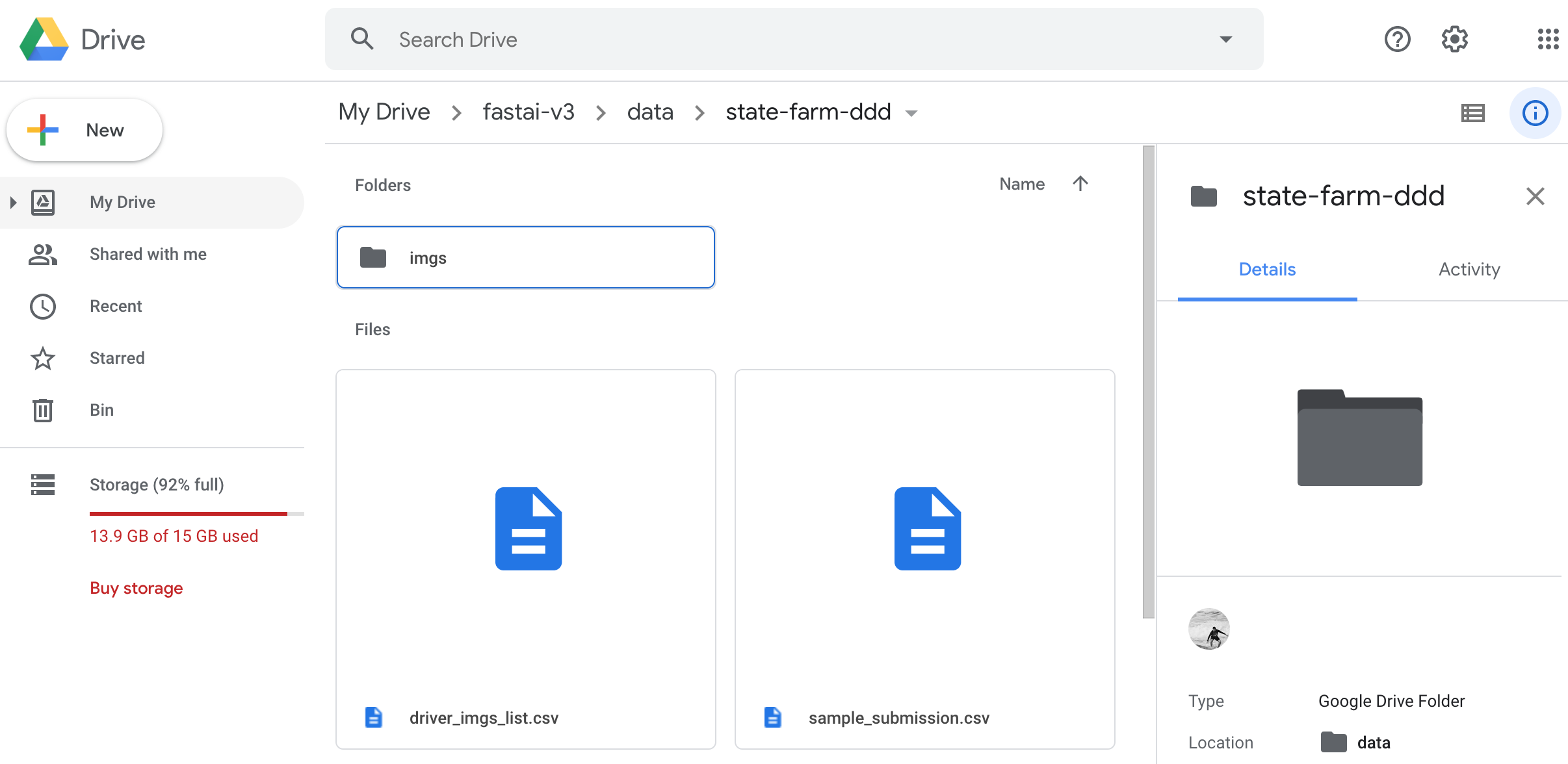

A state-farm-distracted-driver-detection.zip file should have been downloaded. Next let's create a folder in our Google Drive in which we want to put the data. The following path already existed in my Google Drive from previous projects: /content/gdrive/My Drive/fastai-v3/data. I decided to store the distracted driver detection dataset under this data folder as well. However, you can put the data wherever you want as long as it is in your Google Drive.

! mkdir -p /content/gdrive/My\ Drive/fastai-v3/data/state-farm-ddd

Move the zip file to the created folder.

! mv state-farm-distracted-driver-detection.zip /content/gdrive/My\ Drive/fastai-v3/data/state-farm-ddd

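Go to that folder. !cd did not work for me here, because each ! command runs in its own temporary subshell, so the directory change is gone as soon as the command finishes. %cd changes the working directory of the notebook itself (you can read about it here).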

%cd /content/gdrive/My\ Drive/fastai-v3/data/state-farm-ddd

Make sure we are in the correct folder.

! pwd
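
The difference between !cd and %cd can be reproduced in plain Python. In the sketch below, a cd executed in a child shell stands in for !cd, and os.chdir stands in for %cd. The helper name cwd_after_shell_cd is hypothetical, and the example assumes a POSIX shell as found on Colab.

```python
import os
import shlex
import subprocess


def cwd_after_shell_cd(target):
    """Run `cd target` in a child shell and return this process's own cwd."""
    subprocess.run(f"cd {shlex.quote(str(target))}", shell=True, check=True)
    # The child shell has exited; its directory change never reached us.
    return os.getcwd()
```

The function returns the directory we started in, whatever target is. Only os.chdir(target), like %cd, changes the directory for everything that runs afterwards.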

Now unzip the data in that folder.

! unzip state-farm-distracted-driver-detection.zip -d .
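
The archive can also be unpacked with the zipfile module from the Python standard library instead of the unzip command. This is a minimal sketch; extract_zip is a name chosen here.

```python
import zipfile


def extract_zip(zip_path, dest="."):
    """Extract every member of `zip_path` into `dest` and return the member names."""
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)
        return archive.namelist()
```

extract_zip("state-farm-distracted-driver-detection.zip") would unpack into the current folder, just like the command above.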

The data should be there now. However, when I tried it, the files were not immediately available in Google Drive after the unzipping finished. Apparently, Google Drive sometimes needs some time until the files are available, so there is nothing to do except wait. To check whether all the files have arrived, look into the folder in Google Drive from time to time. When I tried it, the sample_submission.csv file was always the last one to appear, so once this file was there, I knew all the files were there. It is important to check this directly in Google Drive and not in the folder view in Google Colab, because Google Colab seems to show the files even when Google Drive does not have them yet.
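
To know what to look for in Google Drive, it helps to know how many files the archive contains. The sketch below counts the files in the zip per top-level folder, so the numbers can be compared with what Google Drive shows. files_per_top_level is a hypothetical helper, and it only reads the archive, not the Drive folder.

```python
import zipfile
from collections import Counter


def files_per_top_level(zip_path):
    """Count the files in the archive, grouped by top-level folder or file name."""
    counts = Counter()
    with zipfile.ZipFile(zip_path) as archive:
        for info in archive.infolist():
            if info.is_dir():
                continue  # directory entries are not files themselves
            counts[info.filename.split("/", 1)[0]] += 1
    return dict(counts)
```

This has to run while the zip file still exists, that is, before the clean-up step.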

When all the files are there, we can remove the zip file.

! rm state-farm-distracted-driver-detection.zip

And we are finished!